Generating a page within 800ms is considered a normal result.

However, such time-consuming rendering is far from satisfactory.

Let's look into the challenges of different project types:

Average load projects

High load projects

Demanding high load projects

All these project types have good or barely good Core Web Vitals, which is far from an excellent result.

A vanilla application always performs better than a customized one. Once third-party extensions and custom modules are installed, rendering time can double or triple. We won't use numbers for vanilla platforms here; instead, let's consider a finished project with an average amount of customization.

Suppose the average page rendering time is 600ms. If we turn the page into a single PHP template in which all variables are already available, pure PHP will render it within 1-2 milliseconds, depending on the HTML size.

The application spends the remaining 598ms or so collecting data and calculating the layout. First, a traditional web application calculates many things on the fly, even though the results may stay the same for years. Second, collecting data from the database is not a fast operation, and a page might require data from hundreds of tables. Third, the code used during rendering is far more functionally capable than the frontend needs: the same models are used by the API, the admin panel, cron jobs, and so on, so they must cover every use case, carry more layers of abstraction and, as a result, become heavier.

Any platform is fast at the start, but many implementations have performance degradation built into their architecture from the very beginning. This leads to continuous, steady degradation with no fix short of a full rebuild.

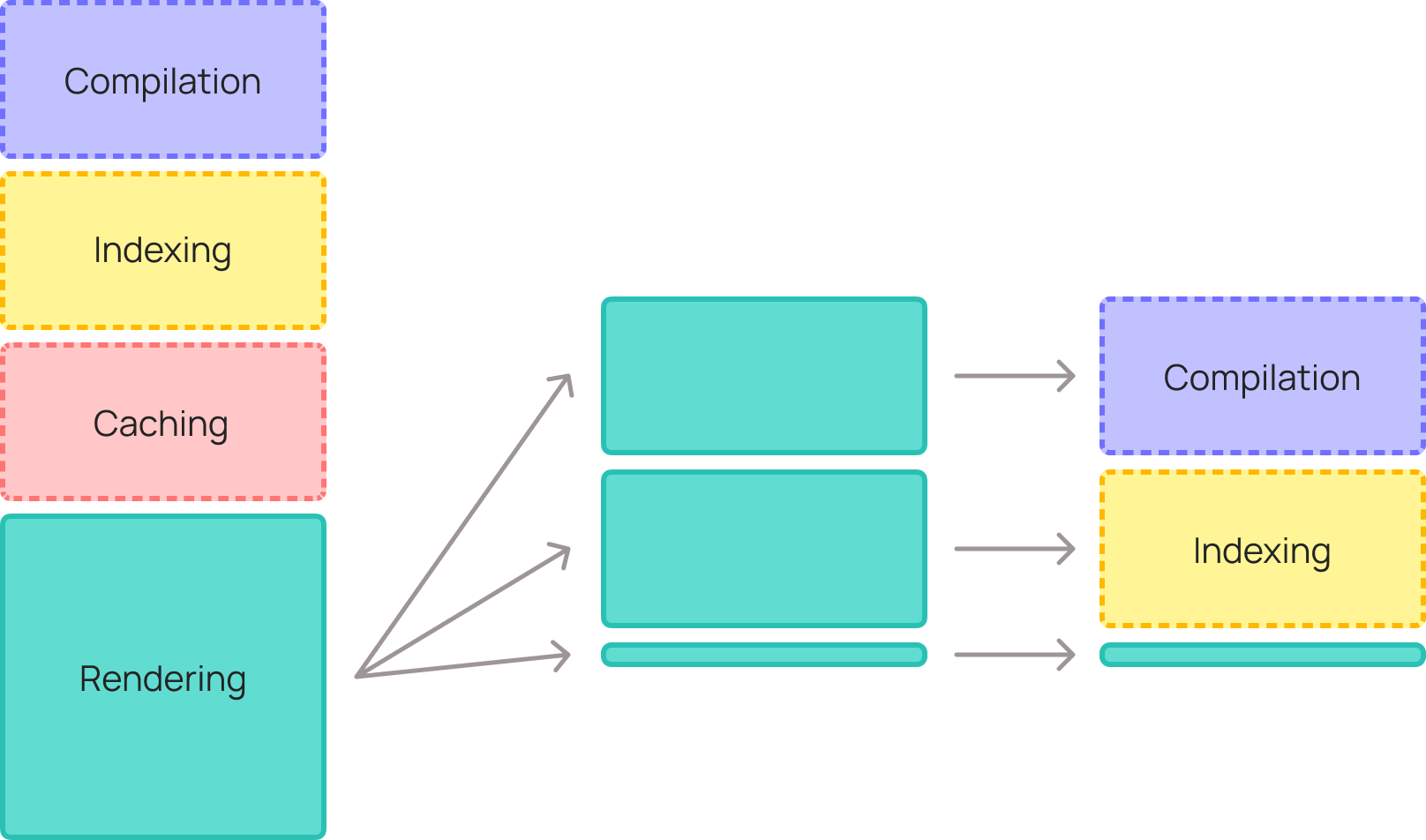

To make rendering performance good enough, the following well-known approaches are widely used:

If we analyze the rendering code more deeply, we find that 99% of it can still be either compiled or indexed. We need to sort the application code and move each part into the relevant area.

Compiled data is stored in the opcache, so it takes microseconds to access. Indexing at the entity level can provide most of the data in a single read operation.

Eventually, we need 3 main data indices:

In addition, there is the user session, which isn't an actual index but has to be read by the page.

Indexed data is stored as objects and accessed on a key-value basis. The speed of fetching and unserializing an object depends on the storage, the amount of data, and the raw object format. On average, Redis with JSON takes 1.5ms to perform 4 reads with unserialization; MySQL with JSON takes 3ms.
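The key-value access pattern can be illustrated with a minimal sketch. It assumes an in-memory dictionary as a stand-in for Redis or MySQL, and the key names and the `read_indices` helper are hypothetical placeholders rather than part of the concept itself. It only shows the shape of the work: four reads, each followed by JSON deserialization.

```python
import json

# Stand-in for a key-value store such as Redis; values are JSON strings.
# All key names below are hypothetical placeholders.
STORE = {
    "index:a:42": json.dumps({"id": 42, "title": "Sample"}),
    "index:b:42": json.dumps({"blocks": ["header", "content", "footer"]}),
    "index:c:default": json.dumps({"locale": "en_US"}),
    "session:abc": json.dumps({"customer_id": 7}),
}


def read_indices(store, keys):
    """Fetch each key once and deserialize its JSON payload."""
    return {key: json.loads(store[key]) for key in keys}


# Three index reads plus the session read: four reads per page.
page_data = read_indices(
    STORE,
    ["index:a:42", "index:b:42", "index:c:default", "session:abc"],
)
```

With a real store, the four lookups could likely be sent in one round trip (for example, a multi-key read), which is one reason the per-page cost stays in the low milliseconds.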

As demonstrated, the final scheme contains no caching, because it brings no benefit except in very specific cases. We are all used to the cache saving the day, but now we can start getting used to how easy and unconstrained development is without it.

The only missing piece is an engine that organically binds all application parts together and satisfies modern web-development needs: modularity, layout, templating, object manager, plugins, etc. If the engine is built with respect for every millisecond, it won't add more than 1-3ms of overhead.

A new application based on the Coin Concept can render a page in 3-7 milliseconds. It requires additional memory to store the indices but can make rendering up to hundreds of times faster.
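As a rough sanity check, the per-request budget can be added up from the figures quoted in the text. The sketch below is only arithmetic: the component names are hypothetical labels, and the Redis figure (1.5ms for 4 reads) is assumed for the index reads.

```python
# Per-request components in milliseconds as (low, high) pairs,
# taken from the figures quoted in the text.
BUDGET_MS = {
    "template_render": (1.0, 2.0),   # pure PHP template, depends on HTML size
    "index_reads": (1.5, 1.5),       # 4 reads + unserialization (Redis, JSON)
    "engine_overhead": (1.0, 3.0),   # modularity, layout, plugins, etc.
}


def total_range(budget):
    """Sum the low and high bounds of every component."""
    low = sum(lo for lo, _ in budget.values())
    high = sum(hi for _, hi in budget.values())
    return low, high


low, high = total_range(BUDGET_MS)

# Speedup relative to the 600ms average page used earlier in the text.
speedup_worst = 600 / high
speedup_best = 600 / low
```

Under these assumptions the components sum to 3.5-6.5ms, which falls inside the 3-7ms range. Swapping in the MySQL figure (3ms) for the index reads would raise both bounds by 1.5ms.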

It's not necessary to implement the concept on every page of a website. If a website has 20 different page types, making the top 5 fast will already deliver 80% of the value.

It's worth mentioning that there will be exceptions where generation time exceeds 10ms, for example:

Indices are continuously updated in the background as data changes. If the indexer is based on direct database access and processes items in batches, in the worst-case scenario it will build 800 indices per second, or about 100K in 2 minutes.
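A batch-oriented indexer of this kind might look roughly like the sketch below. It is an illustration under stated assumptions, not the actual indexer: `build_batch` and `write_batch` are hypothetical callbacks standing in for "load a batch of entities directly from the database" and "store the resulting index documents", and the batch size is arbitrary. The `seconds_to_index` helper just restates the throughput arithmetic.

```python
from itertools import islice


def batches(items, size):
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def reindex(entity_ids, build_batch, write_batch, batch_size=100):
    """Rebuild index documents batch by batch; return how many were written."""
    written = 0
    for chunk in batches(entity_ids, batch_size):
        documents = build_batch(chunk)   # hypothetical: one DB query per batch
        write_batch(documents)           # hypothetical: one bulk write per batch
        written += len(documents)
    return written


def seconds_to_index(total_items, rate_per_second=800):
    """Time needed at a given throughput (800/s is the worst case in the text)."""
    return total_items / rate_per_second


# Usage with in-memory stand-ins for the database and the index storage.
index_storage = {}
count = reindex(
    range(250),
    build_batch=lambda ids: {i: {"id": i} for i in ids},
    write_batch=index_storage.update,
)
```

At 800 indices per second, `seconds_to_index(100_000)` gives 125 seconds, which matches the "100K in about 2 minutes" estimate.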

In normal mode, the indexer's delay in publishing data changes will be 1-3 seconds.

To reach the best performance, the indexer should meet the following criteria:

For demanding, continuously developed projects, the Coin approach could save hundreds or thousands of hours that are otherwise invested in continuously mitigating performance degradation, resolving incidents, building more complex caching strategies, and so on.